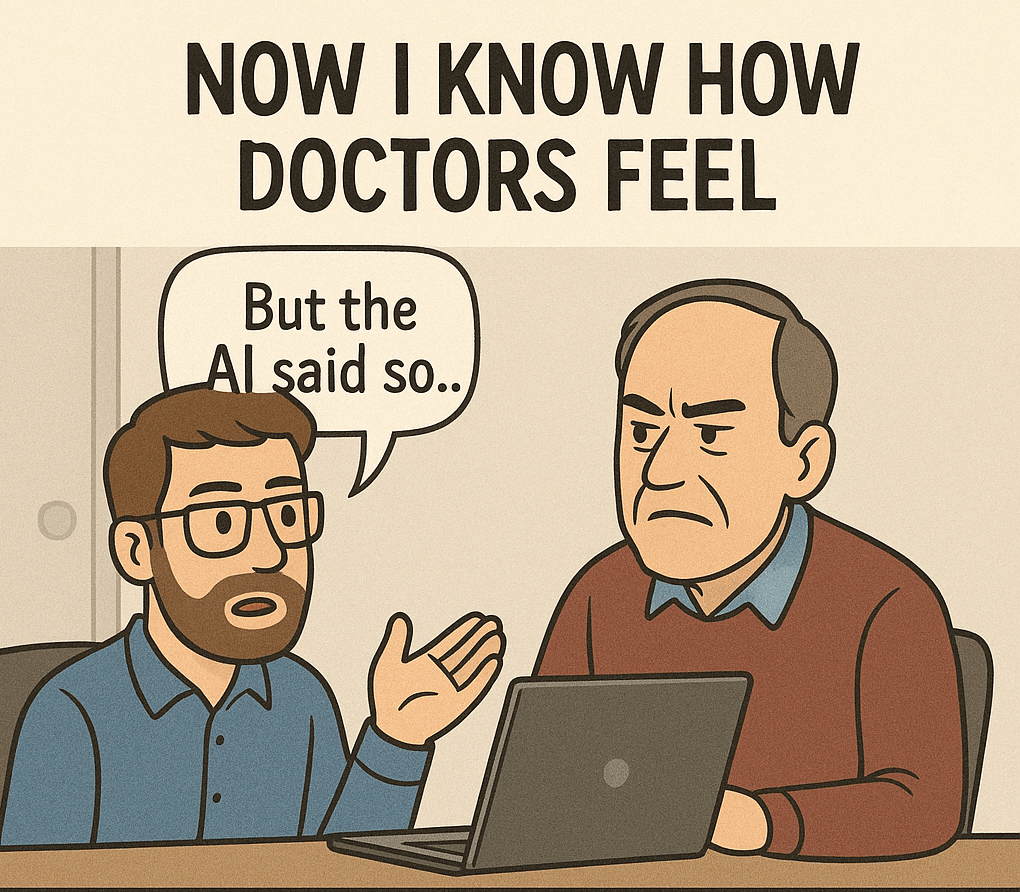

With all these AI-based code generators floating around, I’ve started to feel a strange sense of sympathy for doctors.

You know how patients walk in, quote some random article from Google, and then challenge the diagnosis? Yeah… that’s happening now – in tech.

Lately, data engineers have been coming to me with unusual bugs or performance issues. These aren’t beginners; they know their stuff. But after hours of trying to figure out what’s going on, I ask them:

“Where did this syntax/logic come from?”

The answer?

“AI tool <name>”

Sometimes the script isn’t even valid for the platform we’re using. But because it looks correct, there’s an assumption that the platform or library must be broken.

What I’m starting to notice is a shift in mindset.

We used to write code based on what the platform actually supports. Now it’s the other way around: we write code based on what the AI suggests and then expect the platform to support it.

That’s the transformation (and not the data kind).

Write first, validate later.

Assume the tool is wrong, not the code.

And that’s where I feel the pain doctors face. We’ve entered the era of “AI-generated confidence” without the checks and balances.

Now I get it, Doc. (Sorry.)
